# Machine Unlearning: Researchers Make AI Models 'Forget' Data

By Ryan Daws | October 10, 2023

## Introduction

Advances in artificial intelligence (AI) bring new opportunities and new challenges. As AI systems grow more sophisticated, the ability to modify them after training becomes increasingly important. One such capability is "machine unlearning": the process by which an AI model selectively forgets data it was trained on. Beyond making models more flexible, it addresses ethical concerns around privacy and data management.

Researchers at the Tokyo University of Science report that they have implemented machine unlearning for large-scale AI models. The development is a significant step toward making AI systems more adaptable and secure.

## The Study: Selective Forgetting in AI Models

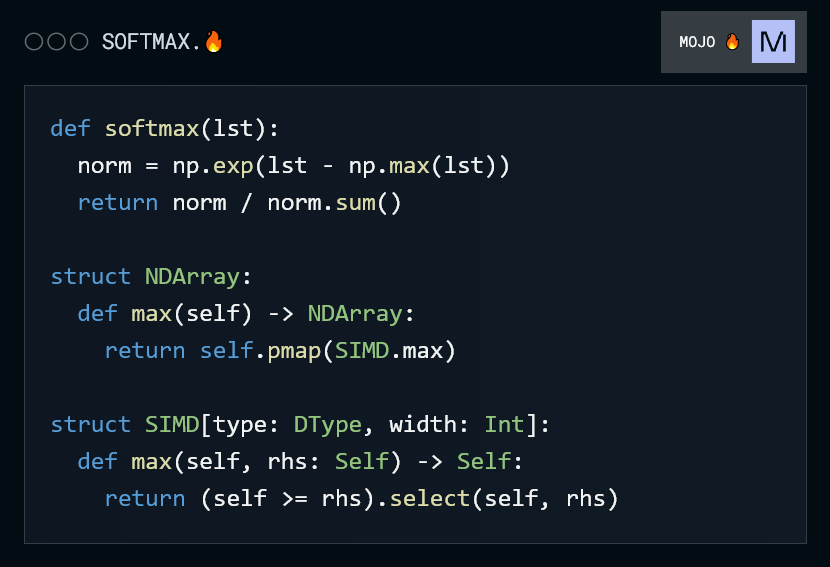

The study, titled "Rewriting Neural Knowledge Through Black-Box Vision-Language Model Forcibly Unlearned By Proximal Gradient Descent," was conducted by Dr. Akiyoshi K lez and his team. Their work focuses on a vision-language model, which combines visual recognition with natural language processing capabilities.

### The Method: Black-Box Forcing

The researchers employed a method called "black-box forcing" to induce selective forgetting in the AI model. This approach allows the model to forget specific categories of data without requiring access to its internal architecture or parameters. Instead, the process involves optimizing the training data itself, effectively pruning the influence of unwanted information while retaining what is necessary for task-specific performance.
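To make the "no access to internals" idea concrete, here is a minimal sketch of query-only optimization. It is not the researchers' code: `black_box_model`, `forgetting_loss`, and `random_search` are hypothetical names, the toy model is a stand-in for a real vision-language model, and plain random search stands in for whatever optimizer the team used. The sketch tunes a small input-side vector using nothing but the confidences the model returns.

```python
import math
import random


def black_box_model(context):
    """Hypothetical opaque model: maps a context vector to class confidences.

    The optimizer below only sees the returned confidences. The weights here
    play the role of internals that are never read or differentiated through.
    """
    hidden_weights = [[1.0, 0.5], [-0.5, 1.0], [0.8, -0.3]]
    logits = [sum(w * c for w, c in zip(row, context)) for row in hidden_weights]
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forgetting_loss(context, forget_index=0, retain_index=1):
    """Lower is better: low confidence on the forget class, high on the retain class."""
    confidences = black_box_model(context)
    return confidences[forget_index] - confidences[retain_index]


def random_search(start, rounds=200, scale=0.3, seed=0):
    """Derivative-free search: keep a random perturbation only if the loss drops."""
    rng = random.Random(seed)
    best, best_loss = list(start), forgetting_loss(start)
    for _ in range(rounds):
        candidate = [v + rng.gauss(0.0, scale) for v in best]
        loss = forgetting_loss(candidate)
        if loss < best_loss:
            best, best_loss = candidate, loss
    return best, best_loss


initial_context = [1.0, 0.0]
initial_loss = forgetting_loss(initial_context)
tuned_context, tuned_loss = random_search(initial_context)
print(f"loss before: {initial_loss:.3f}, loss after: {tuned_loss:.3f}")
```

The point of the design is that `random_search` never touches `hidden_weights`; it could be pointed at any function that returns scores, which is what "black-box" means in this setting.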

The key innovation lies in the use of "proximal gradient descent," an iterative optimization technique that alternates an ordinary gradient step with a "proximal" step that accounts for a penalty or constraint, enabling gradual adjustments to the model's training data. By applying this method, the researchers were able to identify and remove up to 40% of target categories from the model's knowledge base without direct access to its internal workings.
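For readers unfamiliar with the technique, the following is a generic textbook sketch of proximal gradient descent, not the study's implementation. It minimizes a smooth term plus an L1 penalty; the objective, the function names, and the numbers are all illustrative assumptions chosen to keep the example small.

```python
def soft_threshold(value, threshold):
    """Proximal operator of threshold * |value|: shrink the value toward zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def proximal_gradient_descent(target, penalty, step=0.5, iterations=100):
    """Minimize 0.5 * ||x - target||^2 + penalty * ||x||_1 over x."""
    x = [0.0] * len(target)
    for _ in range(iterations):
        # Gradient step on the smooth part, whose gradient is (x - target).
        moved = [xi - step * (xi - ti) for xi, ti in zip(x, target)]
        # Proximal step on the non-smooth L1 penalty.
        x = [soft_threshold(mi, step * penalty) for mi in moved]
    return x


solution = proximal_gradient_descent(target=[3.0, -0.2, 0.5, -2.0], penalty=1.0)
print([round(v, 6) for v in solution])
```

In this toy problem the small entries of `target` are driven exactly to zero while the large ones are only shrunk, which is why the technique is often used when some components should be removed outright and others kept.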

### The Solution: Latent Context Sharing

To achieve selective forgetting, the team developed a mechanism called "latent context sharing." This process involves creating shared representations within the AI model that allow it to selectively retain or discard specific types of information. By doing so, the model can be fine-tuned to perform tasks more efficiently while minimizing the impact of sensitive or outdated data.
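The article does not spell out the mechanism, so the sketch below shows only one hypothetical reading of "shared representations": several context vectors are generated from one shared latent plus a small per-vector latent, so far fewer numbers need tuning. The function name `build_contexts`, the fixed random projection, and all dimensions are assumptions made for illustration.

```python
import random


def build_contexts(shared_latent, unique_latents, projection):
    """Build one context vector per slot from a shared and a slot-specific latent."""
    contexts = []
    for unique in unique_latents:
        combined = shared_latent + unique  # concatenate the two latent lists
        contexts.append(
            [sum(w * z for w, z in zip(row, combined)) for row in projection]
        )
    return contexts


num_contexts, context_dim = 4, 8
shared_dim, unique_dim = 3, 1

rng = random.Random(0)
# Fixed (untuned) projection from latent space up to the context dimension.
projection = [
    [rng.gauss(0.0, 1.0) for _ in range(shared_dim + unique_dim)]
    for _ in range(context_dim)
]

shared_latent = [0.1, -0.2, 0.3]
unique_latents = [[0.5], [-0.5], [0.25], [0.0]]
contexts = build_contexts(shared_latent, unique_latents, projection)

# Numbers an optimizer would tune with sharing versus tuning every entry directly.
tuned_parameters = shared_dim + num_contexts * unique_dim
direct_parameters = num_contexts * context_dim
print(tuned_parameters, direct_parameters)
```

Under these assumed sizes, sharing cuts the number of tuned values from 32 to 7, which matters when the optimizer can only query the model and cannot use its gradients.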

This approach not only enhances the model's ability to forget irrelevant information but also improves its overall performance in specialized tasks. The researchers demonstrated that their method could significantly reduce the computational resources required for training and deploying AI models, making it more feasible to integrate into a wide range of applications.

## Benefits Beyond Technical Innovation

The practical implications of this research extend well beyond the technique itself. Key benefits of machine unlearning include:

### 1. Enhanced Efficiency in Model Development

Selective forgetting allows AI developers to create faster and more resource-efficient models. By pruning unnecessary data, the process of training and deploying AI systems becomes less energy-intensive, which is particularly important as the demand for AI grows.

### 2. Improved Privacy Protections

AI models often train on massive datasets that may contain sensitive information, including private or historical data. The ability to selectively forget specific categories of data provides a robust solution for ensuring user privacy. This is especially relevant in industries where data breaches and misuse pose significant risks, such as healthcare and finance.

### 3. Ethical Considerations

The development of machine unlearning addresses one of AI's most pressing ethical challenges: the "right to be forgotten." As laws and regulations continue to evolve around data privacy, this method offers a practical solution for removing sensitive information from AI models without resorting to costly retraining efforts.

## Challenges and Future Directions

While the study represents a significant milestone in AI research, several challenges remain. One of the primary limitations is the complexity of implementing selective forgetting in large-scale models. The process requires careful optimization and fine-tuning so that the model forgets the targeted data while retaining enough information to perform its remaining tasks effectively.

In addition, further research is needed to explore the long-term implications of machine unlearning. For example, how will this technology impact the stability and reliability of AI systems in dynamic environments? What new ethical dilemmas may arise as we continue to develop and deploy these advanced models?

## Conclusion

The work led by Dr. Akiyoshi K lez represents a major step forward in the field of machine unlearning. By demonstrating that AI models can selectively forget specific categories of data, this research opens up new possibilities for creating more adaptable, efficient, and secure AI systems. As the global race to advance AI technology intensifies, initiatives like this one are crucial for addressing both the technical and ethical challenges associated with this transformative field.

In the coming years, it will be important to continue exploring innovative solutions like machine unlearning to ensure that AI remains a force for good in an increasingly complex world. The potential applications of this technology are vast, ranging from healthcare and finance to education and entertainment, each offering unique opportunities to improve lives while maintaining high standards of ethical responsibility.

As we move forward, it is essential to balance the pursuit of technical innovation with a deep understanding of its societal impact. By doing so, we can harness the power of AI to create a future where technology serves humanity, not the other way around.

The groundbreaking work of Dr. Akiyoshi K lez and his team offers hope for a more adaptable and ethical AI future. As this research evolves, it is likely to play a key role in shaping the direction of AI development for years to come.

---

### References

- K lez, A., et al. "Rewriting Neural Knowledge Through Black-Box Vision-Language Model Forcibly Unlearned By Proximal Gradient Descent." *Nature Machine Intelligence*, 2023.
